pyspark wordcount

PySpark environment setup

Reference: https://www.cnblogs.com/TTyb/p/9546265.html

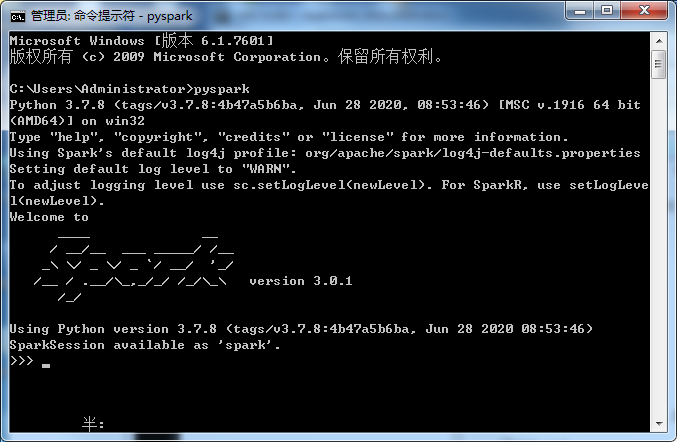

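To verify the setup, type `pyspark` at the command prompt. It should start the interactive PySpark shell and print the Spark startup banner.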

If it fails with the following error, the Spark environment variables are set up incorrectly:

```
Failed to find Spark jars directory.
```

On Windows, the directory is:

```
D:\spark-3.0.1-bin-hadoop2.7\bin
```

In other words, the directory containing `pyspark.cmd` must be included in the environment variables (the `PATH` variable).
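The requirement above can be checked from Python with the standard library. This is a minimal sketch: `find_pyspark_launcher` and `spark_bin_on_path` are hypothetical helper names, and it relies on `shutil.which`, which on Windows also tries the extensions listed in `PATHEXT` (so it finds `pyspark.cmd`).

```python
import os
import shutil


def find_pyspark_launcher(search_path=None):
    """Return the full path of the `pyspark` launcher found on PATH, or None.

    On Windows, shutil.which also tries the PATHEXT extensions, so this
    locates pyspark.cmd; on Linux/macOS it locates the `pyspark` script.
    """
    return shutil.which("pyspark", path=search_path)


def spark_bin_on_path(bin_dir, search_path=None):
    """Check whether `bin_dir` (e.g. the Spark bin directory) is listed in PATH."""
    if search_path is None:
        search_path = os.environ.get("PATH", "")
    # normcase makes the comparison case-insensitive on Windows;
    # normpath removes trailing separators and redundant components.
    target = os.path.normcase(os.path.normpath(bin_dir))
    return any(
        os.path.normcase(os.path.normpath(entry)) == target
        for entry in search_path.split(os.pathsep)
        if entry  # skip empty entries produced by doubled separators
    )
```

On a correctly configured Windows machine, `spark_bin_on_path(r"D:\spark-3.0.1-bin-hadoop2.7\bin")` should return `True`, and `find_pyspark_launcher()` should return the full path of `pyspark.cmd` instead of `None`.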

Test program

Create `test.txt` with the following content:

```
abc
```

The test program is as follows:

```python
from pyspark import SparkContext
```
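Only the first line of the test program survived. Judging by the printed result, it most likely follows the familiar Spark quick-start pattern of counting the lines that contain "a" and the lines that contain "b". The sketch below is written under that assumption; `contains_a`, `contains_b`, `count_a_b` and `format_result` are hypothetical names, not the original code.

```python
def contains_a(line):
    """Predicate passed to RDD.filter: does the line contain 'a'?"""
    return "a" in line


def contains_b(line):
    """Predicate passed to RDD.filter: does the line contain 'b'?"""
    return "b" in line


def count_a_b(sc, path="test.txt"):
    """Count lines of `path` containing 'a' and 'b', using an existing SparkContext."""
    lines = sc.textFile(path).cache()  # cached because the RDD is scanned twice
    num_as = lines.filter(contains_a).count()
    num_bs = lines.filter(contains_b).count()
    return num_as, num_bs


def format_result(num_as, num_bs):
    """Render the two counts in the same format as the printed result."""
    return f"Lines with a: {num_as}, lines with b: {num_bs}"
```

In the `pyspark` shell, where `sc` is already defined, `print(format_result(*count_a_b(sc, "test.txt")))` prints one line in that format. In a standalone script, create the context first with `sc = SparkContext("local", "test")` and call `sc.stop()` when finished. Passing `sc` in, rather than creating it inside the function, avoids the "only one SparkContext" error in the shell.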

Printed result:

```
Lines with a: 2, lines with b: 2
```
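The title refers to word count, while the test program counts matching lines. For comparison, here is a minimal sketch of the usual RDD word count (`flatMap` to words, `map` to `(word, 1)` pairs, `reduceByKey` to sum), assuming words are separated by whitespace. The function names are hypothetical, and `word_count_local` is a plain-Python equivalent added only to cross-check small inputs.

```python
from collections import Counter
from operator import add


def tokenize(line):
    """Split a line into words on whitespace (an assumed, simple tokenizer)."""
    return line.split()


def word_count_rdd(sc, path="test.txt"):
    """Classic RDD word count: flatMap -> map to (word, 1) -> reduceByKey."""
    counts = (
        sc.textFile(path)
        .flatMap(tokenize)
        .map(lambda word: (word, 1))
        .reduceByKey(add)
    )
    return dict(counts.collect())  # collect() is fine for small outputs only


def word_count_local(lines):
    """Plain-Python equivalent, handy for checking results on small inputs."""
    return dict(Counter(word for line in lines for word in tokenize(line)))
```

With a live context, `word_count_rdd(sc, "test.txt")` should give the same dictionary as `word_count_local(open("test.txt"))`.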